Tunnel fire source depth estimation technology based on infrared and visible light image fusion

摘要:

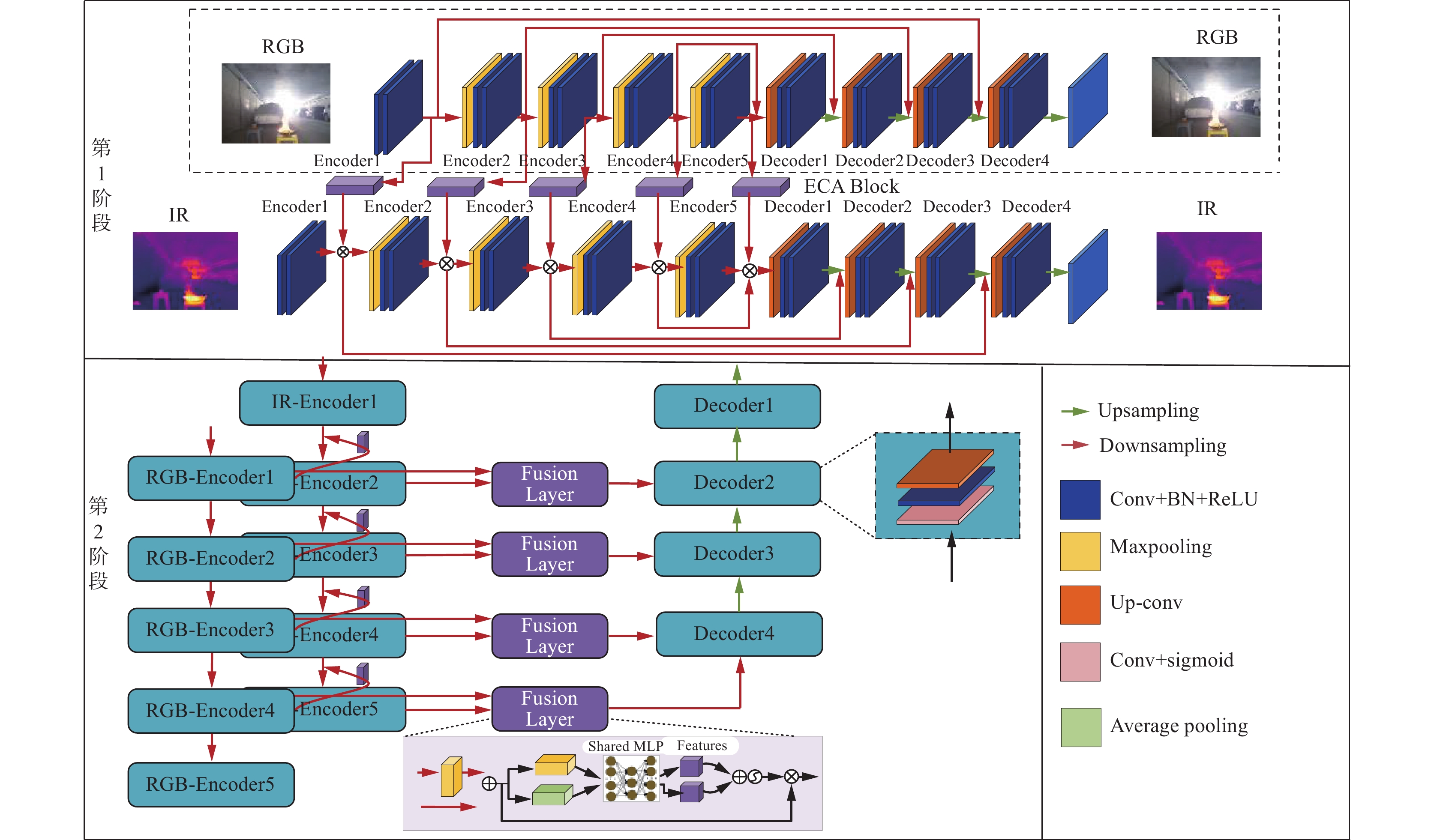

矿井巷道、交通隧道等场景受火灾威胁的困扰,采用基于图像的智能火灾探测方法在火灾初期快速识别其发生位置具有重要意义。现有方法面临时间序列一致性问题,且对相机姿态变化具有高度敏感性,在复杂动态环境中的识别性能下降。针对该问题,提出一种红外(IR)和可见光(RGB)图像融合的隧道火源深度估计方法。引入自监督学习框架的位姿网络,来预测相邻帧间的位姿变化。构建两阶段训练的深度估计网络,基于UNet网络架构分别提取IR和RGB特征并进行不同尺度特征融合,确保深度估计过程平衡。引入相机高度损失,进一步提高复杂动态环境中火源探测的准确性和可靠性。在自制隧道火焰数据集上的实验结果表明,以Resnet50为骨干网络时,构建的隧道火源自监督单目深度估计网络模型的绝对值相对误差为0.102,平方相对误差为0.835,均方误差为4.491,优于主流的Lite-Mono,MonoDepth,MonoDepth2,VAD模型,且精确度阈值为1.25,1.252,1.253时整体准确度最优;该模型对近景和远景区域内物体的预测效果优于DepthAnything,MonoDepth2,Lite-Mono模型。

Abstract:Tunnel scenarios such as mine roadways and traffic tunnels are often plagued by fire threats. It is of great significance to use image-based intelligent fire detection methods to rapidly identify the fire's location during its early stages. However, existing methods face the problem of times series consistency and are highly sensitive to changes in camera pose, resulting in decreased detection performance in complex and dynamic environments. To address this issue, a tunnel fire source depth estimation method based on infrared (IR) and visible light (RGB) image fusion was proposed. A pose network within a self-supervised learning framework was introduced to predict pose changes between adjacent frames. A two-stage training depth estimation network was constructed. Based on the UNet network architecture, IR and RGB features were extracted and fused at different scales, ensuring a balanced depth estimation process. A camera height loss was introduced to further enhance the accuracy and reliability of fire source detection in complex and dynamic environments. Experimental results on a self-constructed tunnel flame dataset demonstrated that when Resnet50 was used as the backbone network, the absolute relative error of the constructed tunnel fire source self-supervised monocular depth estimation network model was 0.102, the square relative error was 0.835, and the mean square error was 4.491, outperforming mainstream models such as Lite-Mono, MonoDepth, MonoDepth2, and VAD. The overall accuracy was optimal under accuracy thresholds of 1.25, 1.252, and 1.253. The model had better prediction results for objects in close-up and long range areas than DepthAnything, MonoDepth2, Lite-Mono models.

Table 1 Quantitative results of five monocular depth estimation network models

| Model | Backbone | AbsRel | SqRel | RMS | RMSELog | $\delta<1.25$ | $\delta<1.25^2$ | $\delta<1.25^3$ |
|---|---|---|---|---|---|---|---|---|
| Lite-Mono[26] | ResNet18 | 0.115 | 0.903 | 4.863 | 0.198 | 0.867 | 0.939 | 0.970 |
| MonoDepth[13] | ResNet18 | 0.112 | 0.847 | 4.720 | 0.197 | 0.880 | 0.950 | 0.980 |
| MonoDepth2[8] | ResNet18 | 0.112 | 0.836 | 4.594 | 0.183 | 0.884 | 0.956 | 0.974 |
| VAD[27] | ResNet18 | 0.110 | 0.863 | 4.684 | 0.187 | 0.883 | 0.962 | 0.982 |
| Proposed model | ResNet18 | 0.105 | 0.840 | 4.489 | 0.184 | 0.893 | 0.975 | 0.975 |
| Lite-Mono[26] | ResNet50 | 0.110 | 0.883 | 4.663 | 0.190 | 0.877 | 0.945 | 0.981 |
| MonoDepth[13] | ResNet50 | 0.110 | 0.843 | 4.710 | 0.196 | 0.880 | 0.950 | 0.980 |
| MonoDepth2[8] | ResNet50 | 0.108 | 0.836 | 4.594 | 0.181 | 0.887 | 0.956 | 0.984 |
| VAD[27] | ResNet50 | 0.106 | 0.868 | 4.584 | 0.181 | 0.893 | 0.962 | 0.983 |
| Proposed model | ResNet50 | 0.102 | 0.835 | 4.491 | 0.180 | 0.892 | 0.967 | 0.984 |

The last three columns give the proportion of pixels with $\delta<\theta$ for $\theta=1.25$, $1.25^2$, and $1.25^3$.

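Table 2 Ablation experimental results

| Model | Parameters/MiB | AbsRel | SqRel | RMS | RMSELog | $\delta<1.25$ | $\delta<1.25^2$ | $\delta<1.25^3$ |
|---|---|---|---|---|---|---|---|---|
| Base | 14.8 | 0.112 | 0.836 | 4.594 | 0.183 | 0.884 | 0.956 | 0.974 |
| Base+FU | 16.7 | 0.113 | 0.844 | 4.560 | 0.185 | 0.889 | 0.961 | 0.970 |
| Base+LH | 15.1 | 0.111 | 0.847 | 4.483 | 0.192 | 0.889 | 0.952 | 0.974 |
| Base+FU+LH | 17.0 | 0.105 | 0.840 | 4.489 | 0.184 | 0.893 | 0.975 | 0.975 |

The last three columns give the proportion of pixels with $\delta<\theta$ for $\theta=1.25$, $1.25^2$, and $1.25^3$.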
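The error and accuracy columns reported in Tables 1 and 2 are not defined in this excerpt. Assuming they follow the definitions commonly used in monocular depth estimation benchmarks, they could be computed roughly as in the minimal sketch below. The function name `depth_metrics`, the validity mask (`gt > 0`), and the toy depth values are illustrative assumptions, not the authors' evaluation code.

```python
import numpy as np


def depth_metrics(gt, pred, theta=1.25):
    """Common monocular depth metrics, computed over valid pixels only."""
    gt = np.asarray(gt, dtype=np.float64)
    pred = np.asarray(pred, dtype=np.float64)

    # Assumption: non-positive ground-truth depth marks a missing measurement.
    valid = gt > 0
    gt, pred = gt[valid], pred[valid]

    diff = gt - pred
    # delta is the larger of the two depth ratios, so it is always >= 1.
    delta = np.maximum(gt / pred, pred / gt)

    return {
        "abs_rel": float(np.mean(np.abs(diff) / gt)),
        "sq_rel": float(np.mean(diff ** 2 / gt)),
        "rms": float(np.sqrt(np.mean(diff ** 2))),
        "rmse_log": float(np.sqrt(np.mean((np.log(gt) - np.log(pred)) ** 2))),
        # Fraction of pixels whose ratio stays under theta, theta^2, theta^3.
        "delta_1": float(np.mean(delta < theta)),
        "delta_2": float(np.mean(delta < theta ** 2)),
        "delta_3": float(np.mean(delta < theta ** 3)),
    }


# Toy example: five pixels, the last one without ground truth.
gt = np.array([2.0, 4.0, 8.0, 10.0, 0.0])
pred = np.array([2.0, 4.4, 12.0, 19.0, 3.0])
metrics = depth_metrics(gt, pred)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Under these definitions, lower is better for the four error columns and higher is better for the three threshold columns, which is consistent with how the tables are read in the abstract.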

[1] ZHOU Zhengbing. Study of road tunnel fire safety monitor system[D]. Wuhan:Wuhan University of Technology,2010.

[2] LIU Yiying. Depth estimation from monocular image based on deep convolutional neural networks[D]. Xi'an:Xi'an University of Electronic Science and Technology,2019.

[3] SHAO Haojie,WANG Kangkang,LIANG Jiawei. Depth estimation of monocular images based on multi-scale features and Wasserstein distance loss[J]. Information & Computer,2023,35(4):76-78,106.

[4] WEN Jing,AN Guoyan,LIANG Yudong. Monocular image depth estimation based on CNN features extraction and weighted transfer learning[J]. Journal of Graphics,2019,40(2):248-255.

[5] WANG Quande,ZHANG Songtao. Monocular depth estimation with multi-scale feature fusion[J]. Journal of Huazhong University of Science and Technology(Natural Science Edition),2020,48(5):7-12.

[6] CHENG Deqiang,ZHANG Huaqiang,KOU Qiqi,et al. Indoor self-supervised monocular depth estimation based on level feature fusion[J]. Optics and Precision Engineering,2023,31(20):2993-3009. DOI: 10.37188/OPE.20233120.2993

[7] ZHOU Tinghui,BROWN M,SNAVELY N,et al. Unsupervised learning of depth and ego-motion from video[C]. IEEE Conference on Computer Vision and Pattern Recognition,Honolulu,2017:1851-1858.

[8] GODARD C,MAC AODHA O,FIRMAN M,et al. Digging into self-supervised monocular depth estimation[C]. IEEE/CVF International Conference on Computer Vision,Seoul,2019:3828-3838.

[9] WANG Lijun,WANG Yifan,WANG Linzhao,et al. Can scale-consistent monocular depth be learned in a self-supervised scale-invariant manner[C]. IEEE/CVF International Conference on Computer Vision,Montreal,2021:12707-12716.

[10] LUO Xuan,HUANG Jiabin,SZELISKI R,et al. Consistent video depth estimation[J]. ACM Transactions on Graphics,2020,39(4). DOI: 10.1145/3386569.3392377

[11] LIU Xiangning,ZHAO Yang,WANG Ronggang. Self-attention based multi-stage network for unsupervised monocular depth estimation[J]. Journal of Signal Processing,2020,36(9):1450-1456.

[12] CHEN Ying,WANG Yiliang. Unsupervised monocular depth estimation based on dense feature fusion[J]. Journal of Electronics & Information Technology,2021,43(10):2976-2984. DOI: 10.11999/JEIT200590

[13] GODARD C,MAC AODHA O,BROSTOW G J. Unsupervised monocular depth estimation with left-right consistency[C]. IEEE Conference on Computer Vision and Pattern Recognition,Honolulu,2017:270-279.

[14] WU Shouchuan,ZHAO Haitao,SUN Shaoyuan. Depth estimation from monocular infrared video based on Bi-recursive convolutional neural network[J]. Acta Optica Sinica,2017,37(12):254-262.

[15] DU Lichan,QIN Tuanfa,LI Xiangcheng. Depth estimation method based on monocular bifocal imaging and SIFT feature matching[J]. Video Engineering,2013,37(9):19-22. DOI: 10.3969/j.issn.1002-8692.2013.09.006

[16] LI Xu,DING Meng,WEI Donghui,et al. Depth estimation method based on monocular infrared image in VDAS[J]. Systems Engineering and Electronics,2021,43(5):1210-1217. DOI: 10.12305/j.issn.1001-506X.2021.05.07

[17] QU Yi,CHEN Ying. Unsupervised monocular depth estimation based on edge enhancement[J]. Systems Engineering and Electronics,2024,46(1):71-79.

[18] XIAN Ke,CAO Zhiguo,SHEN Chunhua,et al. Towards robust monocular depth estimation:a new baseline and benchmark[J]. International Journal of Computer Vision,2024,132(7):2401-2419. DOI: 10.1007/s11263-023-01979-4

[19] BI Hongbo,TONG Yuyu,ZHANG Jiayuan,et al. Depth alignment interaction network for camouflaged object detection[J]. Multimedia Systems,2024,30(1). DOI: 10.1007/S00530-023-01250-3

[20] WANG Qilong,WU Banggu,ZHU Pengfei,et al. ECA-net:efficient channel attention for deep convolutional neural networks[C]. IEEE/CVF Conference on Computer Vision and Pattern Recognition,Seattle,2020:11534-11542.

[21] RONNEBERGER O,FISCHER P,BROX T. U-net:convolutional networks for biomedical image segmentation[M]//Lecture Notes in Computer Science. Cham:Springer International Publishing,2015:234-241.

[22] XU Han,MA Jiayi,JIANG Junjun,et al. U2Fusion:a unified unsupervised image fusion network[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence,2022,44(1):502-518. DOI: 10.1109/TPAMI.2020.3012548

[23] WILLMOTT C,MATSUURA K. Advantages of the mean absolute error (MAE) over the root mean square error (RMSE) in assessing average model performance[J]. Climate Research,2005,30:79-82. DOI: 10.3354/cr030079

[24] YE Xiaowen,ZHANG Yin'e,ZHOU Qi. Image inpainting method based on improved reconstruction loss function[J]. Journal of Gannan Normal University,2023,44(6):106-111.

[25] WANG Zhou,BOVIK A C,SHEIKH H R,et al. Image quality assessment:from error visibility to structural similarity[J]. IEEE Transactions on Image Processing,2004,13(4):600-612. DOI: 10.1109/TIP.2003.819861

[26] ZHANG Ning,NEX F,VOSSELMAN G,et al. Lite-mono:a lightweight CNN and transformer architecture for self-supervised monocular depth estimation[C]. IEEE/CVF Conference on Computer Vision and Pattern Recognition,Vancouver,2023:18537-18546.

[27] XIANG Jie,WANG Yun,AN Lifeng,et al. Visual attention-based self-supervised absolute depth estimation using geometric priors in autonomous driving[J]. IEEE Robotics and Automation Letters,2022,7(4):11998-12005. DOI: 10.1109/LRA.2022.3210298

[28] YANG Lihe,KANG Bingyi,HUANG Zilong,et al. Depth anything:unleashing the power of large-scale unlabeled data[C]. IEEE/CVF Conference on Computer Vision and Pattern Recognition,Seattle,2024:10371-10381.
