Human posture detection method in coal mine


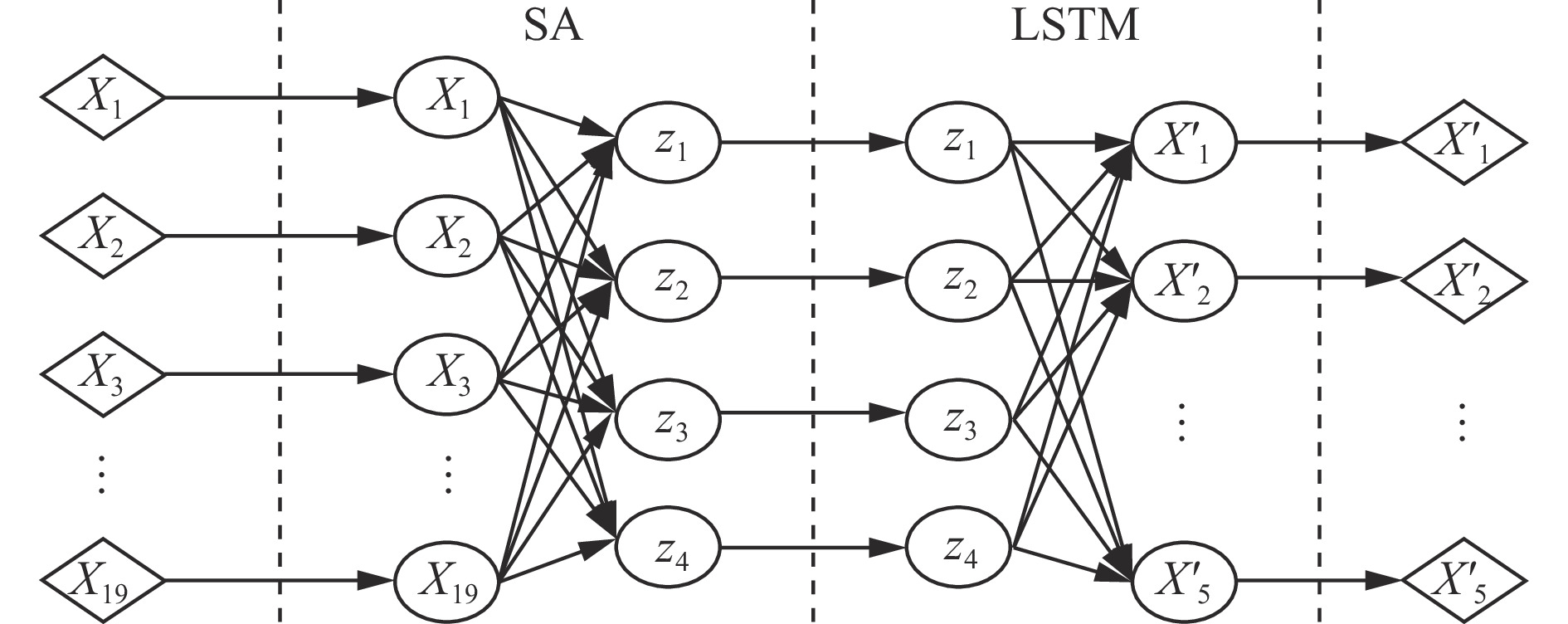

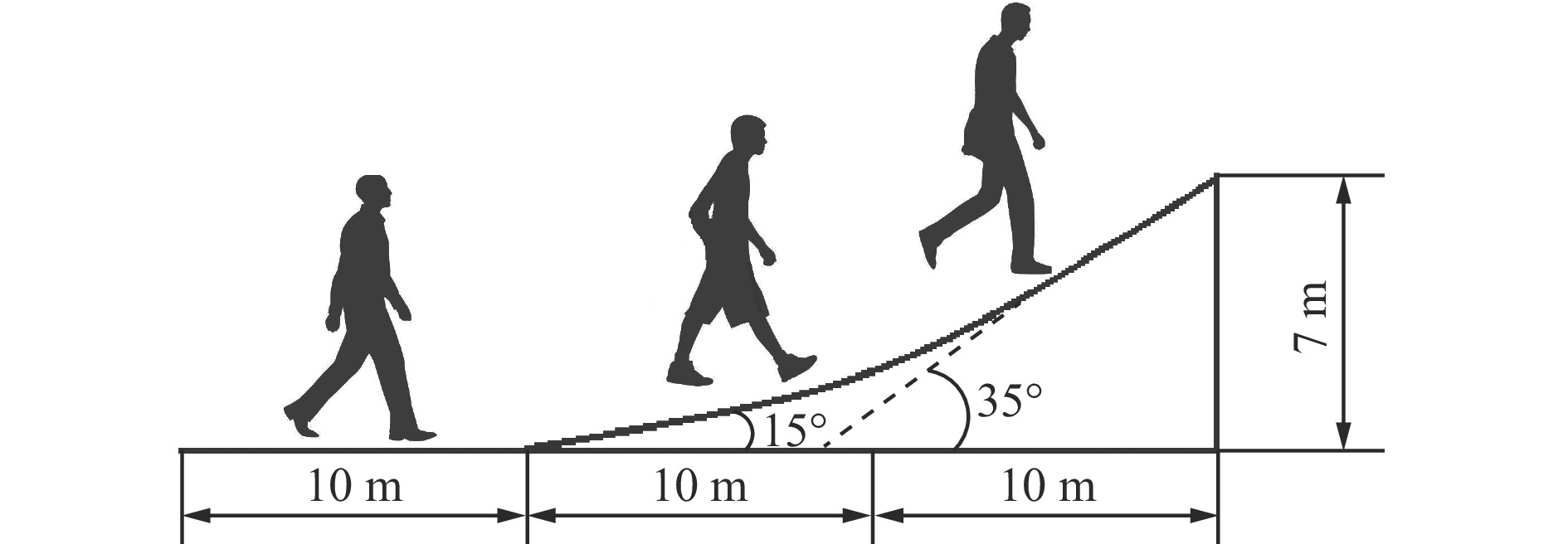

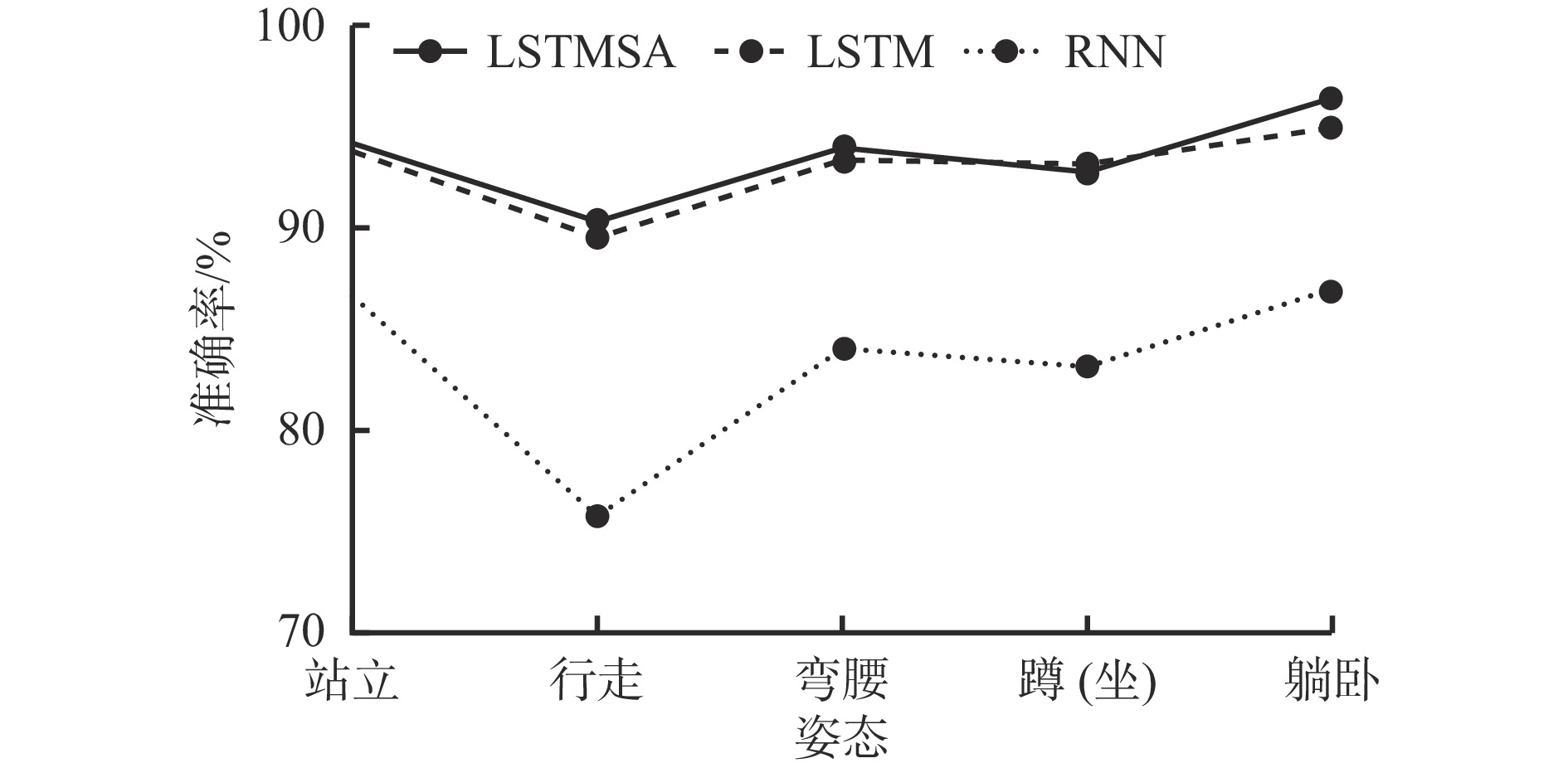

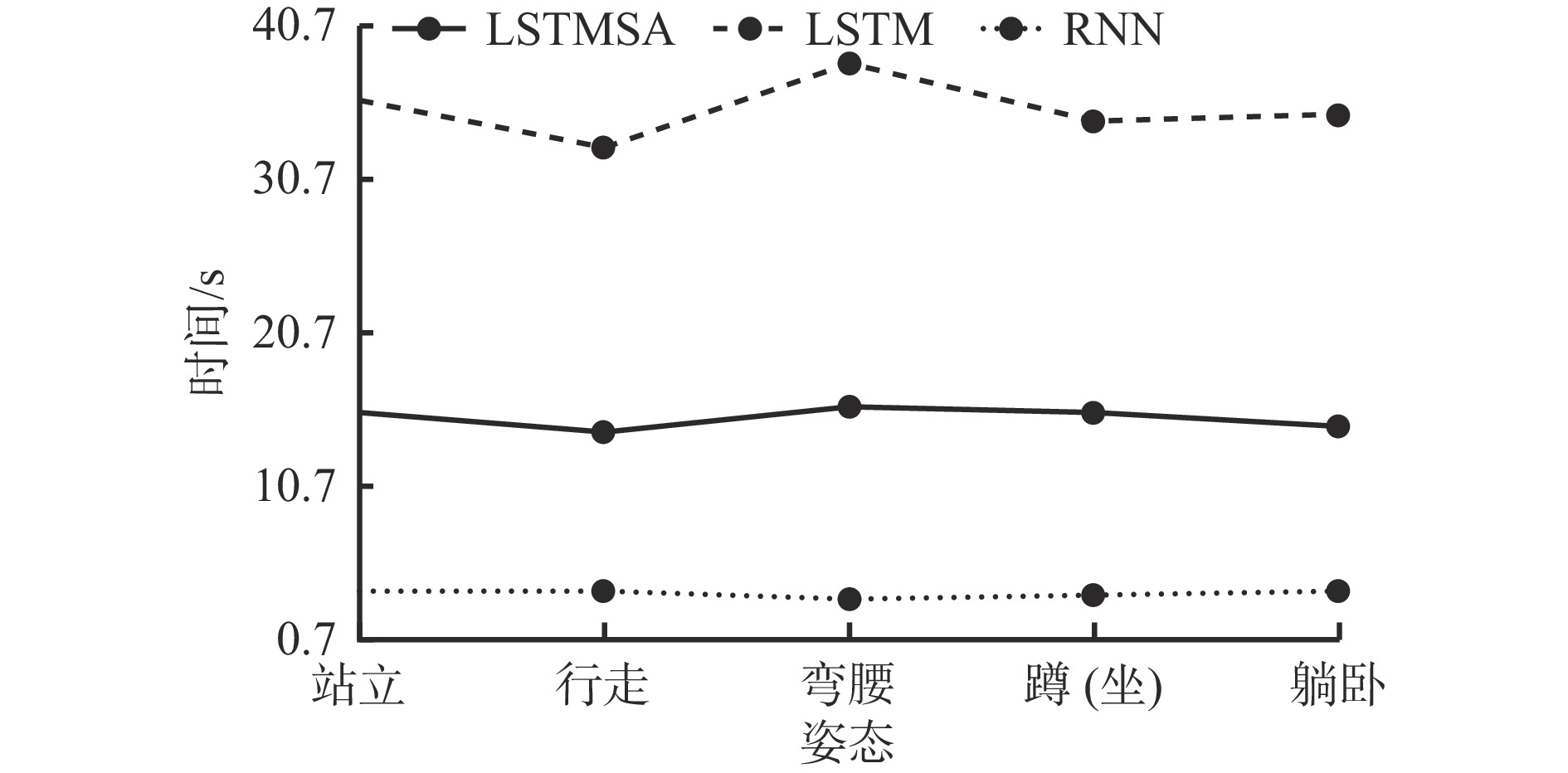

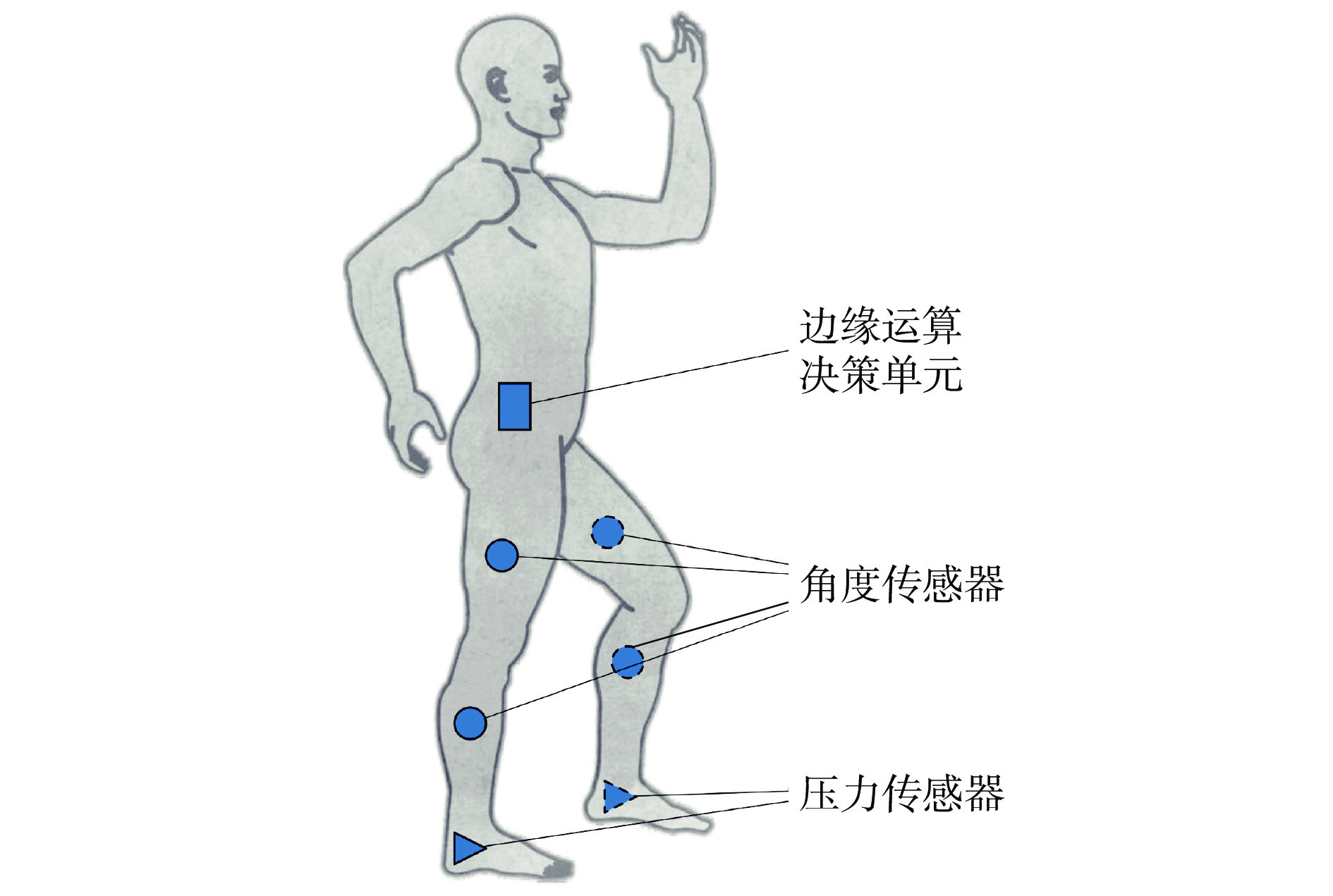

Abstract: Posture detection of underground coal mine personnel can provide useful information for disaster warning and accident rescue. The postures of underground personnel are complex and varied, and posture data form a time series. Existing human posture detection methods either have difficulty processing continuous, correlated posture data or have poor real-time performance because their algorithms are complex and require a separate computer. To address these problems, a human posture detection method for coal mines based on an improved long short-term memory network (LSTM) is proposed. Pressure sensors and angle sensors acquire posture data such as foot pressure and waist and leg angles, and a portable edge computing decision unit carried by each worker classifies the posture. The method detects five postures in real time: standing, walking, bending, squatting (sitting), and lying down. To reduce the dimension of the raw sampled posture data and improve computational efficiency, the LSTM is improved by designing a long short-term memory sparse autoencoder (LSTMSA): a sparse autoencoder (SA) first extracts features from the raw sampled data to reduce its dimension, and the LSTM then detects the posture. Human posture data were collected in a laboratory environment, and LSTMSA, LSTM, and a recurrent neural network (RNN) were each trained and tested. The results show that, under the same experimental settings and sampled data, the accuracy of LSTMSA exceeds 90% for each of the five postures, which is close to that of LSTM and higher than that of RNN. The computing time of LSTMSA is more than 50% shorter than that of LSTM, which meets the real-time requirements of human posture detection in coal mines.
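The two-stage structure described in the abstract (SA for dimension reduction, then LSTM for classification) can be sketched as a forward pass in plain Python. This is only an illustration under stated assumptions: the code size `N_CODE`, the hidden size `N_HIDDEN`, the window length, and the random weights are all hypothetical, and training (including the sparsity penalty of the SA) is omitted. Only the 19 input features and the five posture classes come from the source.

```python
import math
import random

# 19 input features per sample follow Table 2; the other sizes are assumptions.
N_FEATURES, N_CODE, N_HIDDEN = 19, 6, 8
POSTURES = ["standing", "walking", "bending", "squatting (sitting)", "lying"]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(x, W, b):
    """Return W @ x + b, where W is a list of rows."""
    return [sum(w * v for w, v in zip(row, x)) + bias for row, bias in zip(W, b)]

def sa_encode(x, W, b):
    """Encoder half of a sparse autoencoder: 19 raw values -> N_CODE features."""
    return [sigmoid(z) for z in affine(x, W, b)]

def lstm_step(x, h, c, W, b):
    """One LSTM step; W maps [x; h] to stacked gate pre-activations (i, f, o, g)."""
    n = len(h)
    z = affine(x + h, W, b)
    i = [sigmoid(v) for v in z[:n]]            # input gate
    f = [sigmoid(v) for v in z[n:2 * n]]       # forget gate
    o = [sigmoid(v) for v in z[2 * n:3 * n]]   # output gate
    g = [math.tanh(v) for v in z[3 * n:]]      # candidate cell state
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c

def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def classify(window, p):
    """Encode each sample with the SA, run the LSTM over time, score 5 postures."""
    h, c = [0.0] * N_HIDDEN, [0.0] * N_HIDDEN
    for x in window:
        code = sa_encode(x, p["W_enc"], p["b_enc"])
        h, c = lstm_step(code, h, c, p["W_lstm"], p["b_lstm"])
    return softmax(affine(h, p["W_out"], p["b_out"]))

def rand_matrix(rng, rows, cols):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
params = {  # untrained, random weights: for shape checking only
    "W_enc": rand_matrix(rng, N_CODE, N_FEATURES), "b_enc": [0.0] * N_CODE,
    "W_lstm": rand_matrix(rng, 4 * N_HIDDEN, N_CODE + N_HIDDEN),
    "b_lstm": [0.0] * (4 * N_HIDDEN),
    "W_out": rand_matrix(rng, len(POSTURES), N_HIDDEN),
    "b_out": [0.0] * len(POSTURES),
}
window = [[rng.uniform(-1, 1) for _ in range(N_FEATURES)] for _ in range(20)]
probs = classify(window, params)
print(POSTURES[probs.index(max(probs))])
```

Because the LSTM consumes `N_CODE` values per time step instead of 19, its input weight matrix is smaller, which is the kind of saving the abstract attributes to the SA stage.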


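Table 1 Human posture classification in coal mine underground

| Posture | Description |
|---|---|
| Standing | Default initial state; the body forms a straight line and is upright |
| Walking | The legs move forward alternately, with fairly regular changes in angle and amplitude within one cycle; the body remains essentially upright |
| Bending | The lower body is upright and the upper body is bent, forming an angle with the lower body |
| Squatting (sitting) | The legs are bent; the hips, legs, and upper body form three planes, with the hips at an angle to both the legs and the upper body; the upper body may be upright or leaning forward |
| Lying | The body forms a straight line and rests fully on the ground |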

Table 2 Definition of sampled human posture data

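| Data acquisition device | Sensor | Sampled value symbols |
|---|---|---|
| Edge computing decision unit | Three-axis angle sensor 1 (outputs three-axis angles) | X1 (waist x-axis angle), X2 (waist y-axis angle), X3 (waist z-axis angle) |
| Pressure sensor (left foot, with 4 pressure strain gauges) | Pressure strain gauge 1 (left toe) | X4 (left toe pressure) |
| | Pressure strain gauge 2 (left foot, inner side) | X5 (left inner-side pressure) |
| | Pressure strain gauge 3 (left foot, outer side) | X6 (left outer-side pressure) |
| | Pressure strain gauge 4 (left heel) | X7 (left heel pressure) |
| Pressure sensor (right foot, with 4 pressure strain gauges) | Pressure strain gauge 5 (right toe) | X8 (right toe pressure) |
| | Pressure strain gauge 6 (right foot, inner side) | X9 (right inner-side pressure) |
| | Pressure strain gauge 7 (right foot, outer side) | X10 (right outer-side pressure) |
| | Pressure strain gauge 8 (right heel) | X11 (right heel pressure) |
| Angle sensor (left thigh) | Three-axis angle sensor 2 (outputs three-axis angles) | X12 (left leg x-axis angle), X13 (left leg y-axis angle), X14 (left leg z-axis angle) |
| Angle sensor (right thigh) | Three-axis angle sensor 3 (outputs three-axis angles) | X15 (right leg x-axis angle), X16 (right leg y-axis angle), X17 (right leg z-axis angle) |
| Angle sensor (left calf) | Single-axis angle sensor 1 (outputs a single-axis angle) | X18 (angle between left calf and left thigh) |
| Angle sensor (right calf) | Single-axis angle sensor 2 (outputs a single-axis angle) | X19 (angle between right calf and right thigh) |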
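The sampled values defined in Table 2 can be packed into a single 19-element vector per sample. The sketch below shows one possible layout; the class name `PostureSample`, its field names, and the example readings are hypothetical, and only the ordering X1 to X19 follows the table.

```python
from dataclasses import dataclass
from typing import List, Tuple

Triple = Tuple[float, float, float]
Quad = Tuple[float, float, float, float]

@dataclass
class PostureSample:
    """One sampling instant; field order mirrors X1..X19 in Table 2."""
    waist_xyz: Triple        # X1..X3: waist x, y, z angles
    left_foot: Quad          # X4..X7: left toe, inner side, outer side, heel pressure
    right_foot: Quad         # X8..X11: right toe, inner side, outer side, heel pressure
    left_thigh_xyz: Triple   # X12..X14: left leg x, y, z angles
    right_thigh_xyz: Triple  # X15..X17: right leg x, y, z angles
    left_knee: float         # X18: angle between left calf and left thigh
    right_knee: float        # X19: angle between right calf and right thigh

    def to_vector(self) -> List[float]:
        """Flatten the readings into [X1, ..., X19]."""
        return [
            *self.waist_xyz, *self.left_foot, *self.right_foot,
            *self.left_thigh_xyz, *self.right_thigh_xyz,
            self.left_knee, self.right_knee,
        ]

# Made-up readings, for illustration only.
sample = PostureSample(
    waist_xyz=(1.0, 2.0, 3.0),
    left_foot=(10.0, 11.0, 12.0, 13.0),
    right_foot=(20.0, 21.0, 22.0, 23.0),
    left_thigh_xyz=(4.0, 5.0, 6.0),
    right_thigh_xyz=(7.0, 8.0, 9.0),
    left_knee=170.0,
    right_knee=168.0,
)
vector = sample.to_vector()
print(len(vector))
```

A sequence of such vectors, taken at consecutive sampling instants, is the time-series input that the posture classifier consumes.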

Table 3 Human posture detection results of LSTMSA

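| Metric | Standing | Walking | Bending | Squatting (sitting) | Lying |
|---|---|---|---|---|---|
| Groups detected as standing | 1881 | 90 | 31 | 0 | 3 |
| Groups detected as walking | 76 | 1804 | 24 | 34 | 22 |
| Groups detected as bending | 38 | 33 | 1878 | 98 | 7 |
| Groups detected as squatting (sitting) | 3 | 61 | 62 | 1854 | 43 |
| Groups detected as lying | 2 | 12 | 5 | 14 | 1925 |
| Accuracy (%) | 94.05 | 90.2 | 93.9 | 92.7 | 96.25 |
| Computing time (s) | 15.37 | 14.23 | 15.88 | 15.43 | 14.67 |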

Table 4 Human posture detection results of long short-term memory(LSTM)

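| Metric | Standing | Walking | Bending | Squatting (sitting) | Lying |
|---|---|---|---|---|---|
| Groups detected as standing | 1875 | 105 | 60 | 11 | 2 |
| Groups detected as walking | 83 | 1789 | 29 | 22 | 13 |
| Groups detected as bending | 26 | 57 | 1866 | 92 | 12 |
| Groups detected as squatting (sitting) | 10 | 38 | 37 | 1862 | 75 |
| Groups detected as lying | 6 | 11 | 8 | 13 | 1898 |
| Accuracy (%) | 93.75 | 89.45 | 93.3 | 93.1 | 94.9 |
| Computing time (s) | 35.74 | 32.69 | 38.12 | 34.4 | 34.89 |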

Table 5 Human posture detection results of recurrent neural network (RNN)

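| Metric | Standing | Walking | Bending | Squatting (sitting) | Lying |
|---|---|---|---|---|---|
| Groups detected as standing | 1731 | 237 | 61 | 60 | 29 |
| Groups detected as walking | 143 | 1513 | 49 | 78 | 69 |
| Groups detected as bending | 50 | 152 | 1686 | 147 | 41 |
| Groups detected as squatting (sitting) | 35 | 57 | 129 | 1663 | 124 |
| Groups detected as lying | 41 | 41 | 75 | 52 | 1737 |
| Accuracy (%) | 86.55 | 75.65 | 84.3 | 83.15 | 86.85 |
| Computing time (s) | 3.78 | 3.86 | 3.29 | 3.54 | 3.72 |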
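The accuracy rows and the reduction in computing time can be recomputed from the tabulated counts. The sketch below uses the LSTMSA counts from Table 3 and the computing times from Tables 3 and 4. It assumes, based on the fact that every column sums to 2000, that each column holds the groups recorded for one performed posture; the function names are illustrative.

```python
POSTURES = ["standing", "walking", "bending", "squatting (sitting)", "lying"]

# Table 3 (LSTMSA): rows are the detected posture, columns the performed posture.
LSTMSA_CONFUSION = [
    [1881, 90, 31, 0, 3],
    [76, 1804, 24, 34, 22],
    [38, 33, 1878, 98, 7],
    [3, 61, 62, 1854, 43],
    [2, 12, 5, 14, 1925],
]
LSTMSA_TIME_S = [15.37, 14.23, 15.88, 15.43, 14.67]  # Table 3
LSTM_TIME_S = [35.74, 32.69, 38.12, 34.4, 34.89]     # Table 4

def column_totals(confusion):
    """Number of groups recorded for each performed posture."""
    n = len(confusion)
    return [sum(confusion[r][c] for r in range(n)) for c in range(n)]

def per_class_accuracy(confusion):
    """Percentage of each posture's groups that were detected correctly."""
    totals = column_totals(confusion)
    return [100.0 * confusion[c][c] / totals[c] for c in range(len(confusion))]

def time_reduction(fast, slow):
    """Percentage by which `fast` shortens `slow`, element by element."""
    return [100.0 * (1.0 - f / s) for f, s in zip(fast, slow)]

accuracy = per_class_accuracy(LSTMSA_CONFUSION)
reduction = time_reduction(LSTMSA_TIME_S, LSTM_TIME_S)
for name, acc, red in zip(POSTURES, accuracy, reduction):
    print(f"{name}: accuracy {acc:.2f}%, time reduced by {red:.1f}%")
```

Under this reading, the diagonal entry divided by the column total reproduces the accuracy row of Table 3, and every per-posture time reduction is above 50%, consistent with the abstract.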

